D1: Invasive Software-Hardware Architectures for Robotics

Principal Investigators:

Prof. T. Asfour, Prof. W. Stechele

Scientific Researchers:

Abstract

The main research topic of subproject D1 is the exploration of the benefits and limitations of invasive computing for humanoid robotics. Our goal is to use complex robotic scenarios with concurrent processes and time-varying resource demands to demonstrate high-level invasion and negotiation for resources. Invasive computing mechanisms should allow for efficient resource usage and fast execution times while keeping resource utilisation and the predictability of non-functional properties, e.g. power, dependability and security, at an optimal level. Research on self-organisation techniques is therefore key to the efficient allocation of available resources in situations where multiple applications bargain for the same resources. To take full advantage of invasive computing, our algorithms will be able to run on different hardware configurations and support dynamic switching between them.

In the first funding phase, we defined a robotics scenario comprising several algorithms which are required for the implementation of visually guided grasping on a humanoid robot. A number of selected applications have been analysed to provide design specification requirements for projects of the A, B, and C areas. Fixed resources but changing load situations were used for initial evaluations of these algorithms on an invasive hardware platform. Based upon knowledge gained from these experiments, we established performance metrics which were used later on to adapt parameters of running applications in order to provide optimal throughput while available computing resources change. The mentioned adaptable parameters range from simple threshold values to more complex algorithm partitioning schemes. Exploiting information provided by a given invasive computing platform and combining it with the previously defined metrics allowed us to demonstrate improved application performance and quality as compared to state-of-the-art techniques.

In the second funding phase, we investigated resource-aware prediction models and algorithms and evaluated robotic applications on the demonstrator platform. Learning resource-aware prediction models enables context-aware and speculative resource management by providing hints about future resource requirements based on previous robot and system experience. We developed a novel resource-aware algorithm for collision-free motion planning that employs self-monitoring concepts to identify the difficulty of a given planning problem and to dynamically adapt resource usage based on problem difficulty and current planning progress. For the evaluation on the demonstrator platform, we developed several strategies to map computer vision algorithms (Harris corner detection and SIFT feature extraction and matching) to homogeneous and heterogeneous MPSoCs and evaluated these algorithms on resource-aware programming models.

In the third funding phase, the research activities in Project D1 will focus on building an invasive memory system as an integral part of a robot control architecture. This memory system encodes prior world and task knowledge, sensorimotor experience and the application resource requirements associated with robot actions and scene context. Such a memory system will allow 1) encoding, storing and retrieving information about resource requirements, 2) learning prediction models of robot actions and their consequences (world state) as well as their computational resource demands, and 3) implementing a speculative resource management system based on resource-aware prediction models learned from experience. In contrast to Phase II, we will consider parallelism in robot actions, as is the case in bimanual tasks or in combined manipulation and locomotion tasks of a humanoid robot. The approach will be validated in the context of a comprehensive perception and affordance pipeline which combines a wide variety of robot vision algorithms for scene understanding and action selection. Furthermore, we will address the challenges posed by the legacy software of existing robotic systems, as the code of the algorithms currently in use would require complete re-engineering in order to benefit from invasive computing. We will investigate methods for automatically porting the non-invasive code of the perception and affordance pipeline to invasive multicore platforms.

Synopsis

The goals of the project in Phase III are the design of a resource-aware robot memory system as well as the development of an invasive wrapper for legacy code. Furthermore, we will investigate resource-aware image processing on prosthetic hands.

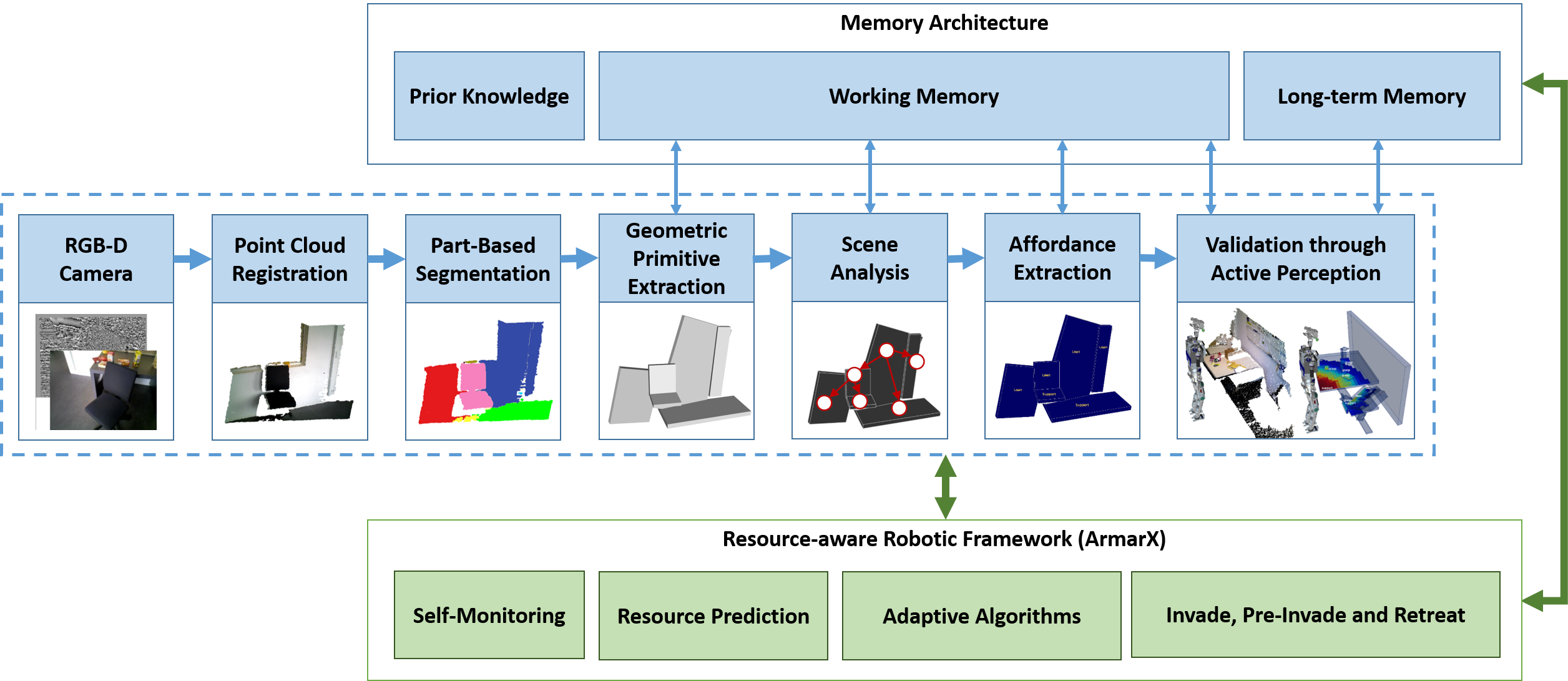

Resource-aware robot memory system: To perform whole-body grasping, manipulation and locomotion tasks in unknown environments, a humanoid robot needs to understand the environment, the objects comprising it, and the interaction possibilities, i.e. the affordances of the current scene. We will therefore address the research question of how active vision and active perception methods can be used so that a humanoid robot autonomously explores its environment and builds affordance-based scene representations, which allow the selection of actions and control strategies to achieve the task goal. We will investigate how such representations can be enriched by information about application resource requirements associated with the involved algorithms, robot actions and scene contexts. To facilitate resource-aware action selection and speculative resource management, we will implement a resource-aware robot memory system consisting of 1) prior knowledge memory, 2) long-term memory with knowledge learned from experience and 3) working memory with knowledge about the current world state. We will investigate how resource awareness can improve system performance in the context of scene exploration, planning and execution of parallel robot actions such as locomotion and manipulation tasks. We will mainly focus on active scene exploration for the generation of whole-body actions, as schematically shown in Figure 0.7. During exploration, the robot needs to distribute resources between essential low-level control tasks (e.g. balancing, locomotion and collision avoidance), high-level planning tasks and the actual scene exploration. On the one hand, resource-aware active perception will use resources aggressively to improve scene understanding if, for example, the robot is in a secure position and no expensive planning algorithm is running. On the other hand, if low-level control or high-level planning occupies most of the resources, scene exploration will adaptively use the remaining resources to still produce a scene understanding, albeit of lower quality, i.e. with more uncertainty and lower spatial and temporal resolution.
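The trade-off described above can be illustrated with a minimal sketch. It is not the project's implementation: the names `Task`, `distribute_cores` and `exploration_resolution`, the priority convention, the core counts and the linear quality model are all assumptions made for illustration. Minimum demands are served in priority order, spare cores are handed out in the same order, and scene exploration lowers its resolution according to what is left.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    priority: int   # assumed convention: lower number = more important
    min_cores: int  # cores needed to run at all
    max_cores: int  # cores the task can usefully exploit


def distribute_cores(tasks, total_cores):
    """Serve minimum demands by priority, then hand out spare cores by priority."""
    ordered = sorted(tasks, key=lambda t: t.priority)
    grant = {t.name: 0 for t in ordered}
    free = total_cores
    # First pass: minimum demand, most important task first.
    for t in ordered:
        if t.min_cores <= free:
            grant[t.name] = t.min_cores
            free -= t.min_cores
    # Second pass: spare cores in priority order, capped at max_cores.
    for t in ordered:
        if grant[t.name] < t.min_cores:
            continue  # task could not be started, so it gets no extras
        extra = min(t.max_cores - grant[t.name], free)
        grant[t.name] += extra
        free -= extra
    return grant


def exploration_resolution(cores, full_cores, full_resolution=1.0):
    """Illustrative quality model: resolution scales linearly with granted cores."""
    return full_resolution * min(cores, full_cores) / full_cores


# Robot in a secure position, no planner running: exploration gets almost everything.
safe = [Task("control", 0, 1, 1), Task("exploration", 2, 1, 8)]
# Robot moving while planning: exploration falls back to the remaining cores.
busy = [Task("control", 0, 2, 3), Task("planning", 1, 2, 4), Task("exploration", 2, 1, 8)]

print(distribute_cores(safe, total_cores=8))
print(distribute_cores(busy, total_cores=8))
```

In this sketch the exploration task never blocks control or planning. It only degrades its own output quality, which mirrors the behaviour described for the resource-aware active perception.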

Invasive wrapper for legacy code: There exists a large body of non-invasive code for robotic applications, e.g. within the aforementioned perception-action framework or the perception pipeline. Multicore platforms offer a beneficial performance/power ratio, even if legacy code remains single-threaded. However, access conflicts on shared resources (memory, on-chip communication, I/O) might create large fluctuations in execution time, violating even soft real-time requirements. Traditionally, manual re-engineering of legacy code is used to resolve such conflicts. Invasive mechanisms offer methods for application isolation and requirement enforcement. During Phase II, we investigated the manual integration of invasive mechanisms into legacy code. In Phase III, we plan to investigate automatic methods. The goal is to investigate the benefits and limitations of an invasive wrapper around robotic legacy code, in order to support porting to invasive multicore platforms.

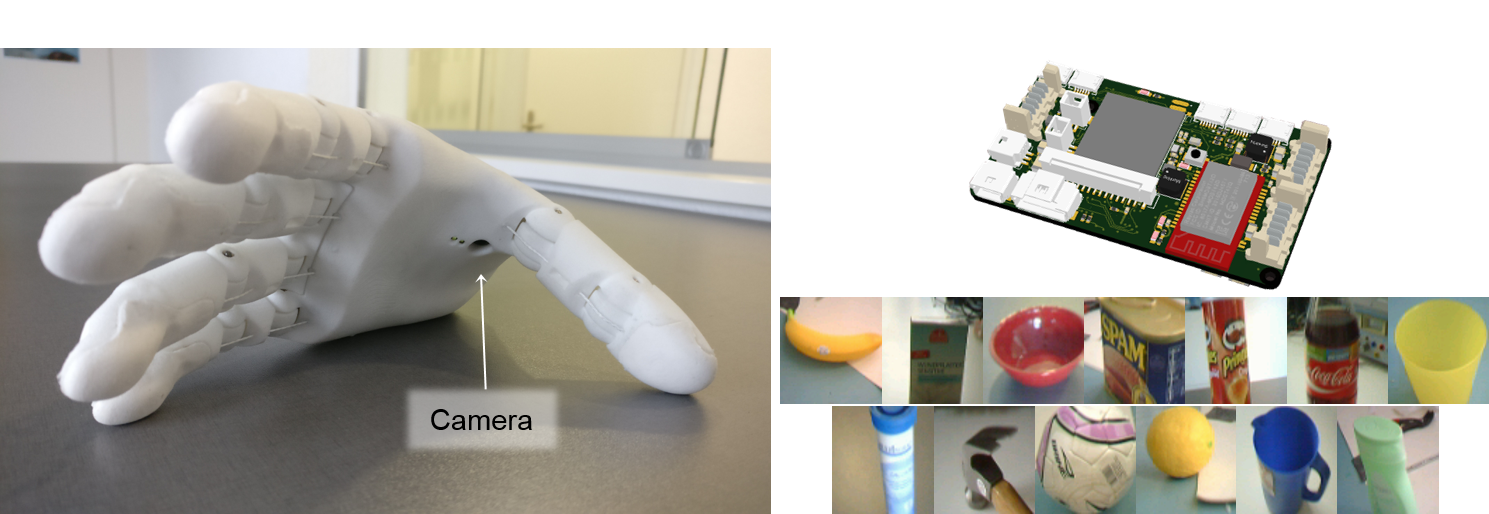

Image processing on the KIT prosthetic hand: We investigate how recent image processing methods, especially deep convolutional neural networks (CNNs), can be trained and deployed on the resource-constrained hardware of a prosthetic hand. The applications we investigate are image classification and segmentation of known objects. The results of these stages are required for higher-level semi-autonomous control strategies which ease the mental burden on the user of the prosthesis. Together with other subprojects, D1 investigates how accelerators (FPGA, TCPA) can be implemented on the prosthesis' hardware and what benefits invasive computing offers in this resource-constrained context.

The KIT Prosthetic Hand has an in-hand camera which can perceive objects which the user wants to grasp. Using the built-in embedded compute system, the image can be classified and segmented.

Approach

Resource-aware prediction models for humanoid motion generation: In Phase II, we developed methods to predict world state changes and the corresponding resource requirements for simple robotic tasks such as a pick-and-place scenario. Such models make it possible to implement special pre-invade requests as a novel mechanism of the invasive computing concept. In order to demonstrate the benefits of resource prediction and invasive mechanisms for robotics applications, we will extend our work towards the generation of whole-body humanoid motion in unstructured environments. To this end, we rely on active perception approaches for scene exploration.
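As a rough illustration of how experience-based prediction could feed a pre-invade request, the sketch below stores observed core usage per action and scene context and derives a hint for an upcoming action sequence. The class `ResourceExperienceMemory`, the function `pre_invade_hint`, the mean-based predictor and the example values are hypothetical and stand in for the learned prediction models. They are not part of any invasive computing API.

```python
import math
from collections import defaultdict
from statistics import mean


class ResourceExperienceMemory:
    """Hypothetical store of observed core usage per (action, scene context)."""

    def __init__(self):
        self._records = defaultdict(list)

    def store(self, action, context, cores_used):
        self._records[(action, context)].append(cores_used)

    def predict(self, action, context, default=1):
        """Predict demand as the rounded-up mean of past observations."""
        samples = self._records.get((action, context))
        if not samples:
            return default  # no experience yet: fall back to an assumed default
        return math.ceil(mean(samples))


def pre_invade_hint(memory, upcoming_actions, context):
    """Peak predicted core demand over the planned actions (0 if none are planned)."""
    return max((memory.predict(a, context) for a in upcoming_actions), default=0)


memory = ResourceExperienceMemory()
memory.store("grasp", "cluttered", 4)
memory.store("grasp", "cluttered", 5)
memory.store("push", "cluttered", 2)

# Hint that could accompany a pre-invade request before the actions start.
print(pre_invade_hint(memory, ["push", "grasp"], "cluttered"))
```

A simple mean is used here only to keep the example short. Any learned model with the same `predict` interface could replace it.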

The perception pipeline and the different parts it consists of are shown in blue. The goal is to derive a resource-aware realisation of the pipeline with its different components to allow for dynamic adaptation to external resource demands and varying problem complexity.

Resource-aware algorithms: In Phase II, we developed and evaluated novel resource-aware motion planning algorithms [Krö+16] with self-monitoring capabilities, which identify the difficulty of the underlying planning problem and dynamically adapt resource usage based on planning difficulty and progress. In Phase III, we will extend these adaptation mechanisms to consider the resource demands and priorities of the robot's different tasks. As an example, low-level control algorithms usually have high priority, as they have to ensure smooth motion execution and safe operation for both the robot and the humans interacting with it. However, a humanoid robot that is currently in a safe position with multiple stable support contacts does not need as many resources for whole-body motion generation and balancing as a robot moving through a cluttered environment. In contrast, high-level planning algorithms usually have low priority: their results are needed to complete a given task, but a delay in providing them does not compromise functionality.
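The idea of adapting a planner's resource claim to difficulty and progress can be sketched as a small decision rule. This is not the algorithm from [Krö+16]. The function `requested_cores`, the two monitoring signals and all thresholds are assumptions chosen for illustration, with a sampling-based planner in mind.

```python
def requested_cores(collision_rate, progress_rate, current,
                    min_cores=1, max_cores=8,
                    hard_threshold=0.5, stall_threshold=0.01):
    """Hypothetical self-monitoring rule for a sampling-based planner.

    collision_rate: fraction of recent samples rejected due to collisions
                    (used as a proxy for problem difficulty)
    progress_rate:  recent relative reduction of the distance to the goal
                    (used as a proxy for planning progress)
    current:        number of cores currently claimed
    """
    hard = collision_rate >= hard_threshold
    stalled = progress_rate < stall_threshold
    if hard and stalled:
        target = current * 2    # difficult problem, no progress: ask for more
    elif not hard and not stalled:
        target = current // 2   # easy problem, good progress: release cores
    else:
        target = current        # mixed signals: keep the current claim
    # Clamp to the range the platform is assumed to offer.
    return max(min_cores, min(max_cores, target))


print(requested_cores(collision_rate=0.8, progress_rate=0.0, current=2))
print(requested_cores(collision_rate=0.1, progress_rate=0.2, current=4))
```

Task priorities, as discussed above, would enter such a rule through `min_cores` and `max_cores`, for instance by lowering the upper bound for a low-priority planner while control tasks are under load.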

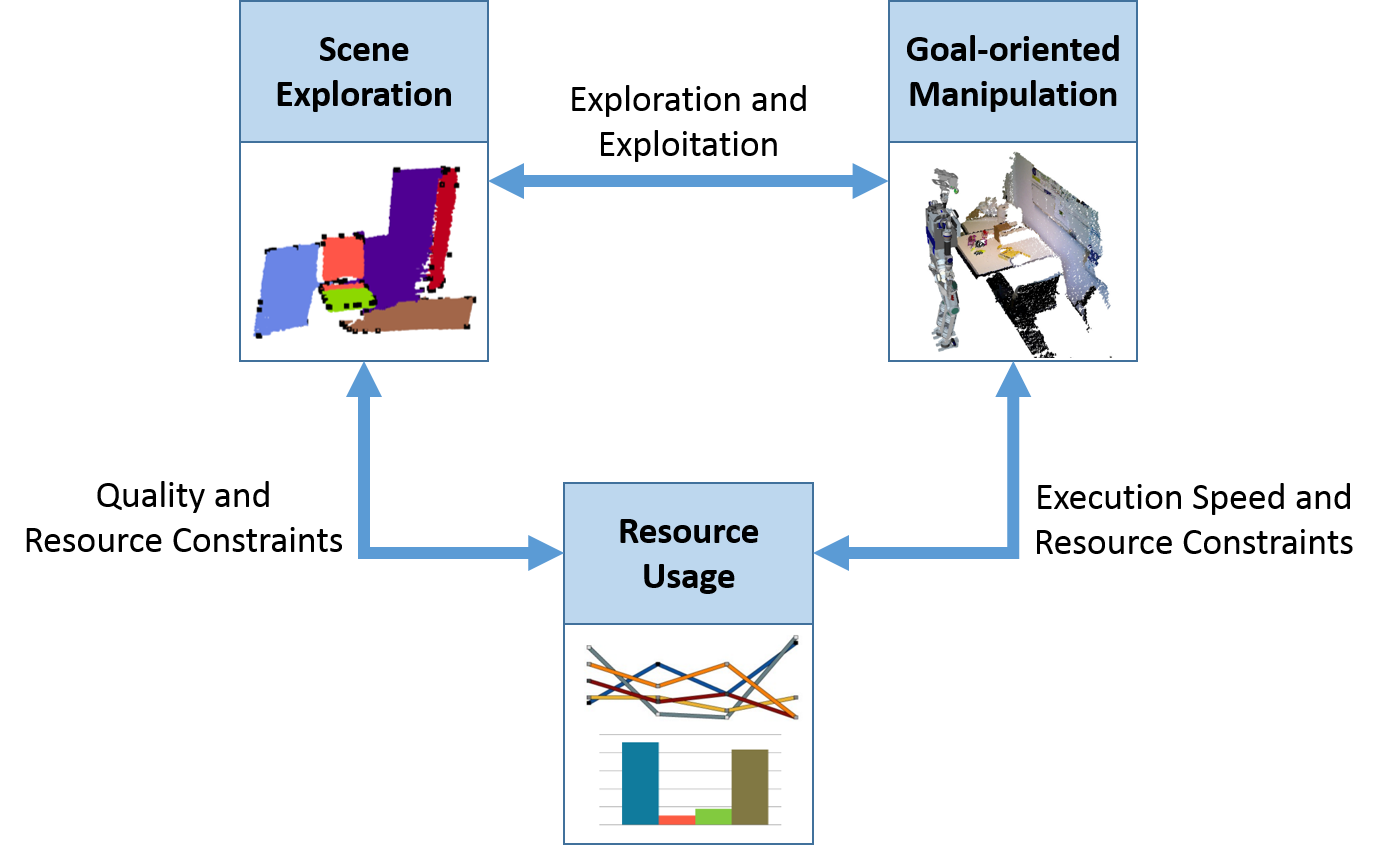

The optimisation cycle between scene exploration, goal-oriented manipulation and resource usage illustrates the contributing factors and how they influence each other.

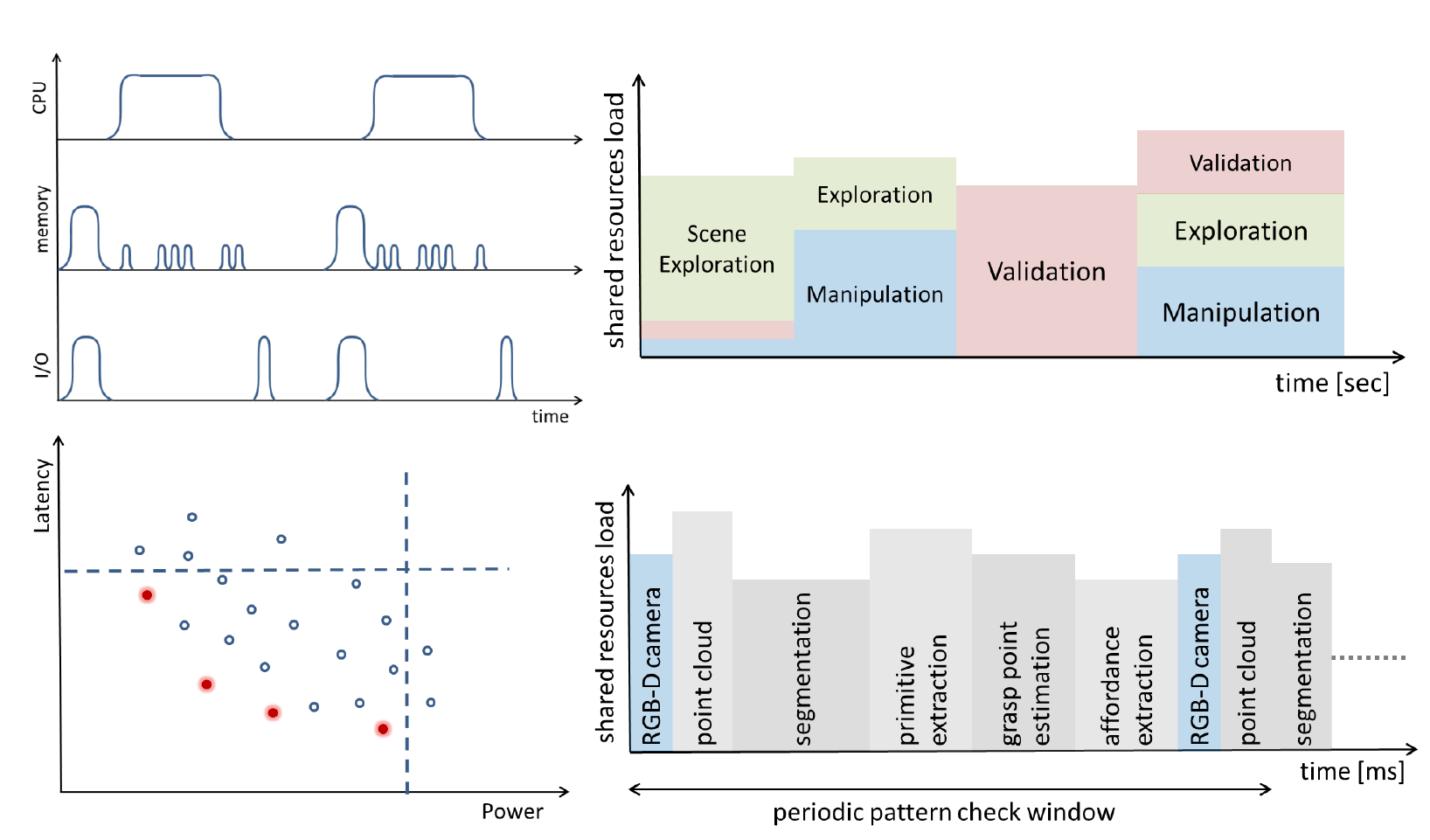

Invasive wrapper: During Phase II, we investigated the manual integration of invasive mechanisms into robotic vision applications. In Phase III, we plan to investigate automatic methods and apply them to non-invasive code from the perception pipeline. The proposed approach for porting robotic legacy code to invasive multicore platforms consists of five steps: (a) profiling of the non-invasive code, in order to identify application-specific access patterns of periodic tasks; (b) design space exploration on the target platform, in order to identify potential operating points; (c) partitioning of shared resources, based on the application-specific access patterns; (d) establishing and configuring an invasive wrapper around the individual legacy applications, in order to isolate access to shared resources; and (e) run-time switching between operating points, in order to enforce end-to-end latency guarantees for legacy code on the invasive multicore platform.

Application profiling, design space exploration, and shared resources partitioning. Upper left: Expected profiling results. Lower left: Design space exploration indicating Pareto-optimal operating points and requirement boundaries. Upper right: Coarse-grained partitioning approach. Lower right: Fine-grained partitioning approach.
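Steps (b) and (e) can be illustrated with a minimal sketch that filters explored configurations down to Pareto-optimal operating points and then picks one at run time. The `OperatingPoint` fields, the three cost dimensions (cores, latency, power), the selection policy and all numbers are assumptions for illustration, not measured results or the project's tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    cores: int
    latency_ms: float
    power_w: float

    def cost(self):
        return (self.cores, self.latency_ms, self.power_w)


def pareto_front(points):
    """Keep points that no other point dominates (lower is better in every dimension)."""
    def dominates(a, b):
        return all(x <= y for x, y in zip(a.cost(), b.cost())) and a.cost() != b.cost()
    return [p for p in points if not any(dominates(q, p) for q in points)]


def select_operating_point(front, latency_bound_ms, available_cores):
    """Run-time switch: lowest-power point meeting the latency bound with the cores at hand."""
    feasible = [p for p in front
                if p.latency_ms <= latency_bound_ms and p.cores <= available_cores]
    return min(feasible, key=lambda p: p.power_w) if feasible else None


# Made-up exploration results for one legacy application.
explored = [
    OperatingPoint(1, 40.0, 1.0),
    OperatingPoint(2, 22.0, 1.8),
    OperatingPoint(4, 12.0, 3.5),
    OperatingPoint(4, 15.0, 3.9),  # dominated by the previous point
]
front = pareto_front(explored)
print(select_operating_point(front, latency_bound_ms=25.0, available_cores=4))
```

When no operating point satisfies the requirement boundary, the function returns `None`. In that case a wrapper would have to negotiate for more resources or report the violation.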

A comprehensive summary of the major achievements of the first and second funding phases can be found on the Project D1 first phase and Project D1 second phase websites.

Publications

| [1] | Nidhi Anantharajaiah, Tamim Asfour, Michael Bader, Lars Bauer, Jürgen Becker, Simon Bischof, Marcel Brand, Hans-Joachim Bungartz, Christian Eichler, Khalil Esper, Joachim Falk, Nael Fasfous, Felix Freiling, Andreas Fried, Michael Gerndt, Michael Glaß, Jeferson Gonzalez, Frank Hannig, Christian Heidorn, Jörg Henkel, Andreas Herkersdorf, Benedict Herzog, Jophin John, Timo Hönig, Felix Hundhausen, Heba Khdr, Tobias Langer, Oliver Lenke, Fabian Lesniak, Alexander Lindermayr, Alexandra Listl, Sebastian Maier, Nicole Megow, Marcel Mettler, Daniel Müller-Gritschneder, Hassan Nassar, Fabian Paus, Alexander Pöppl, Behnaz Pourmohseni, Jonas Rabenstein, Phillip Raffeck, Martin Rapp, Santiago Narváez Rivas, Mark Sagi, Franziska Schirrmacher, Ulf Schlichtmann, Florian Schmaus, Wolfgang Schröder-Preikschat, Tobias Schwarzer, Mohammed Bakr Sikal, Bertrand Simon, Gregor Snelting, Jan Spieck, Akshay Srivatsa, Walter Stechele, Jürgen Teich, Furkan Turan, Isaías A. Comprés Ureña, Ingrid Verbauwhede, Dominik Walter, Thomas Wild, Stefan Wildermann, Mario Wille, Michael Witterauf, and Li Zhang. Invasive Computing. FAU University Press, August 16, 2022. |

| [2] | Zehang Weng, Fabian Paus, Anastasiia Varava, Hang Yin, Tamim Asfour, and Danica Kragic. Graph-based task-specific prediction models for interactions between deformable and rigid objects. In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 5453–5460, 2021. |

| [3] | Nael Fasfous, Manoj-Rohit Vemparala, Alexander Frickenstein, Mohamed Badawy, Felix Hundhausen, Julian Höfer, Naveen-Shankar Nagaraja, Christian Unger, Hans-Jörg Vögel, Jürgen Becker, Tamim Asfour, and Walter Stechele. Binary-LoRAX: Low-power and runtime adaptable XNOR classifier for semi-autonomous grasping with prosthetic hands. In International Conference on Robotics and Automation (ICRA), 2021. |

| [4] | Fabian Paus and Tamim Asfour. Probabilistic representation of objects and their support relations. In International Symposium on Experimental Robotics (ISER), 2020. |

| [5] | Fabian Paus, Teng Huang, and Tamim Asfour. Predicting pushing action effects on spatial object relations by learning internal prediction models. In IEEE International Conference on Robotics and Automation (ICRA), pages 10584–10590, 2020. |

| [6] | Felix Hundhausen, Denis Megerle, and Tamim Asfour. Resource-aware object classification and segmentation for semi-autonomous grasping with prosthetic hands. In IEEE/RAS International Conference on Humanoid Robots (Humanoids), pages 215–221, 2019. |

| [7] | Akshay Srivatsa, Sven Rheindt, Dirk Gabriel, Thomas Wild, and Andreas Herkersdorf. CoD: Coherence-on-demand – runtime adaptable working set coherence for DSM-based manycore architectures. In Dionisios N. Pnevmatikatos, Maxime Pelcat, and Matthias Jung, editors, Embedded Computer Systems: Architectures, Modeling, and Simulation, pages 18–33, Cham, 2019. Springer International Publishing. |

| [8] | Dirk Gabriel, Walter Stechele, and Stefan Wildermann. Resource-aware parameter tuning for real-time applications. In Martin Schoeberl, Christian Hochberger, Sascha Uhrig, Jürgen Brehm, and Thilo Pionteck, editors, Architecture of Computing Systems – ARCS 2019, pages 45–55. Springer International Publishing, 2019. |

| [9] | Daniel Krauß, Philipp Andelfinger, Fabian Paus, Nikolaus Vahrenkamp, and Tamim Asfour. Evaluating and optimizing component-based robot architectures using network simulation. In Winter Simulation Conference, Gothenburg, Sweden, December 2018. |

| [10] | Rainer Kartmann, Fabian Paus, Markus Grotz, and Tamim Asfour. Extraction of physically plausible support relations to predict and validate manipulation action effects. IEEE Robotics and Automation Letters (RA-L), 3(4):3991–3998, October 2018. |

| [11] | Johny Paul. Image Processing on Heterogeneous Multiprocessor System-on-Chip using Resource-aware Programming. Dissertation, Technische Universität München, July 25, 2017. |

| [12] | Fabian Paus, Peter Kaiser, Nikolaus Vahrenkamp, and Tamim Asfour. A combined approach for robot placement and coverage path planning for mobile manipulation. In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 6285–6292, 2017. |

| [13] | Jürgen Teich. Invasive computing – editorial. it – Information Technology, 58(6):263–265, November 24, 2016. |

| [14] | Stefan Wildermann, Michael Bader, Lars Bauer, Marvin Damschen, Dirk Gabriel, Michael Gerndt, Michael Glaß, Jörg Henkel, Johny Paul, Alexander Pöppl, Sascha Roloff, Tobias Schwarzer, Gregor Snelting, Walter Stechele, Jürgen Teich, Andreas Weichslgartner, and Andreas Zwinkau. Invasive computing for timing-predictable stream processing on MPSoCs. it – Information Technology, 58(6):267–280, September 30, 2016. |

| [15] | Manfred Kröhnert. A Contribution to Resource-Aware Architectures for Humanoid Robots. Dissertation, High Performance Humanoid Technologies (H2T), KIT-Faculty of Informatics, Karlsruhe Institute of Technology (KIT), Germany, July 22, 2016. |

| [16] | Manfred Kröhnert, Raphael Grimm, Nikolaus Vahrenkamp, and Tamim Asfour. Resource-Aware Motion Planning. In IEEE International Conference on Robotics and Automation (ICRA), pages 32–39, May 2016. |

| [17] | Mirko Wächter, Simon Ottenhaus, Manfred Kröhnert, Nikolaus Vahrenkamp, and Tamim Asfour. The ArmarX Statechart Concept: Graphical Programming of Robot Behaviour. Frontiers in Robotics and AI, 3(33), 2016. |

| [18] | Johny Paul, Walter Stechele, Benjamin Oechslein, Christoph Erhardt, Jens Schedel, Daniel Lohmann, Wolfgang Schröder-Preikschat, Manfred Kröhnert, Tamim Asfour, Éricles R. Sousa, Vahid Lari, Frank Hannig, Jürgen Teich, Artjom Grudnitsky, Lars Bauer, and Jörg Henkel. Resource-awareness on heterogeneous MPSoCs for image processing. Journal of Systems Architecture, 61(10):668–680, November 6, 2015. |

| [19] | N. Vahrenkamp, M. Wächter, M. Kröhnert, K. Welke, and T. Asfour. The robot software framework ArmarX. Information Technology, 57(2):99–111, 2015. |

| [20] | Johny Paul, Benjamin Oechslein, Christoph Erhardt, Jens Schedel, Manfred Kröhnert, Daniel Lohmann, Walter Stechele, Tamim Asfour, and Wolfgang Schröder-Preikschat. Self-adaptive corner detection on MPSoC through resource-aware programming. Journal of Systems Architecture, 2015. |

| [21] | Johny Paul, Walter Stechele, Éricles R. Sousa, Vahid Lari, Frank Hannig, Jürgen Teich, Manfred Kröhnert, and Tamim Asfour. Self-adaptive Harris corner detector on heterogeneous many-core processor. In Proceedings of the Conference on Design and Architectures for Signal and Image Processing (DASIP). IEEE, October 2014. |

| [22] | Manfred Kröhnert, Nikolaus Vahrenkamp, Johny Paul, Walter Stechele, and Tamim Asfour. Resource prediction for humanoid robots. In Proceedings of the First Workshop on Resource Awareness and Adaptivity in Multi-Core Computing (Racing 2014), pages 22–28, May 2014. |

| [23] | Éricles Sousa, Vahid Lari, Johny Paul, Frank Hannig, Jürgen Teich, and Walter Stechele. Resource-aware computer vision application on heterogeneous multi-tile architecture. Hardware and Software Demo at the University Booth at Design, Automation and Test in Europe (DATE), Dresden, Germany, March 2014. |

| [24] | Johny Paul, Walter Stechele, Manfred Kröhnert, Tamim Asfour, Benjamin Oechslein, Christoph Erhardt, Jens Schedel, Daniel Lohmann, and Wolfgang Schröder-Preikschat. Resource-aware Harris corner detection based on adaptive pruning. In Proceedings of the Conference on Architecture of Computing Systems (ARCS), number 8350 in LNCS, pages 1–12. Springer, February 2014. |

| [25] | Johny Paul, Walter Stechele, Manfred Kröhnert, Tamim Asfour, Benjamin Oechslein, Christoph Erhardt, Jens Schedel, Daniel Lohmann, and Wolfgang Schröder-Preikschat. A resource-aware nearest neighbor search algorithm for K-dimensional trees. In Proceedings of the Conference on Design and Architectures for Signal and Image Processing (DASIP), pages 80–87. IEEE Computer Society Press, October 2013. |

| [26] | Éricles Sousa, Alexandru Tanase, Vahid Lari, Frank Hannig, Jürgen Teich, Johny Paul, Walter Stechele, Manfred Kröhnert, and Tamim Asfour. Acceleration of optical flow computations on tightly-coupled processor arrays. In Proceedings of the 25th Workshop on Parallel Systems and Algorithms (PARS), volume 30 of Mitteilungen – Gesellschaft für Informatik e. V., Parallel-Algorithmen und Rechnerstrukturen, pages 80–89. Gesellschaft für Informatik e.V., April 2013. |

| [27] | Kai Welke, Nikolaus Vahrenkamp, Mirko Wächter, Manfred Kröhnert, and Tamim Asfour. The ArmarX framework - supporting high level robot programming through state disclosure. In Informatik 2013 Workshop on robot control architectures, 2013. |

| [28] | David Schiebener, Julian Schill, and Tamim Asfour. Discovery, segmentation and reactive grasping of unknown objects. In 12th IEEE-RAS International Conference on Humanoid Robots (Humanoids), pages 71–77, November 2012. |

| [29] | Johny Paul, Andreas Laika, Christopher Claus, Walter Stechele, Adam El Sayed Auf, and Erik Maehle. Real-time motion detection based on SW/HW-codesign for walking rescue robots. Journal of Real-Time Image Processing, pages 1–16, 2012. |

| [30] | Johny Paul, Walter Stechele, Manfred Kröhnert, Tamim Asfour, and Rüdiger Dillmann. Invasive computing for robotic vision. In Proceedings of the 17th Asia and South Pacific Design Automation Conference (ASP-DAC), pages 207–212, January 2012. |

| [31] | Jürgen Teich, Jörg Henkel, Andreas Herkersdorf, Doris Schmitt-Landsiedel, Wolfgang Schröder-Preikschat, and Gregor Snelting. Invasive computing: An overview. In Michael Hübner and Jürgen Becker, editors, Multiprocessor System-on-Chip – Hardware Design and Tool Integration, pages 241–268. Springer, Berlin, Heidelberg, 2011. |

| [32] | Jürgen Teich. Invasive algorithms and architectures. it - Information Technology, 50(5):300–310, 2008. |