A5: Scheduling Invasive Multicore Programs Under Uncertainty

Principal Investigators:

Scientific Researchers:

Dr. Bertrand Simon (09/2018-09/2020)

Alexander Lindermayr (10/2020-now)

Abstract

Project A5 is a new project joining the CRC/Transregio for the third funding period. It complements the project area A, Fundamentals, Language and Algorithm Research, with methods for coping with uncertainty, a central theme in Phase 3. Project A5 explores algorithms and analytical techniques for scheduling and resource management with uncertain input.

Synopsis

Scheduling and resource management are central tasks in many parts of invasive computing. Such problems are particularly challenging when real-time constraints are present, in the form of hard or soft deadlines that have to be met. Respecting deadlines is, however, not enough. Typically, precedence constraints among specific subtasks must be obeyed. In addition, hardware and software requirements as well as performance considerations impose further constraints on the compatibility between tasks and processors. Scheduling algorithms must handle various resources such as energy, bandwidth, and other resources. On top of that, in typical applications parts of the input data are uncertain. This can be uncertain tasks sets, unknown arrival times, fluctuations in task execution times, work load or power consumption, or uncertainty about the processor state.

The goal of this project is to develop algorithms that can handle uncertain input data. We design and mathematically analyse algorithms for scheduling and resource management using the invasive computing paradigm. Our methods shall give performance guarantees concerning the predictability and also quantify how hardware and software requirements affect the performance and predictability. This may reveal also optimisation potential in the systems architecture or algorithms.

Approach

We consider complex multiprocessor scheduling tasks with real-time constraints, in the form of hard or soft deadlines, with precedence constraints among specific subtasks, and various kinds of resources such as energy, bandwidth, and other resources. We investigate primarily the following kinds of uncertainty that are typical in practical applications:

- fluctuations in task execution times, modelled as random variables with distributional information or by uncertainty intervals

- uncertain task sets and arrival times modelled as online information

- uncertain arrival times that follow some periodic pattern (periodic real-time scheduling).

- uncertain task suspension when waiting for instance for input data

- conditional task sets and dependencies revealed only at runtime based on execution results

- no certainty on the future is possible but predictions are available: the objective is then to design algorithms that perform well if these predictions are accurate but also provide guarantees regardless of the prediction quality.

The proposed project is of foundational character and aims for theoretical guarantees by building on methods from combinatorial optimisation, mathematical programming, scheduling theory, approximation and online algorithms. Our main focus lies on provable worst-case guarantees. When predictability is crucial, e.g. in safety-critical applications, multicore systems still rely on single-core usage. The reason is that the current state-of-the-art approaches in real-time scheduling do not capture the difficulties in scheduling parallel workloads in a predictable way. However, to fully unfold the power of multicore technology, it must include safety-critical functionalities. For safety-critical functionalities it is indispensable to reliably predict whether a given task will be computed in time, that is, we ask for reliability even in a worst case.

In this project we develop algorithms with worst-case guarantees regarding non-functional properties such as timing, resource utilisation, adaptivity, speed-factors and power consumption. We advance the theoretical foundations to exploit the power of parallel computing and particularly the benefits of invasive computing also for safety-critical applications. We expect to develop algorithms that are not only efficient from a theoretical standpoint but can be efficiently implemented in practice.

The main contributions of this project are summarized below.

Energy-Minimization in DAG Scheduling

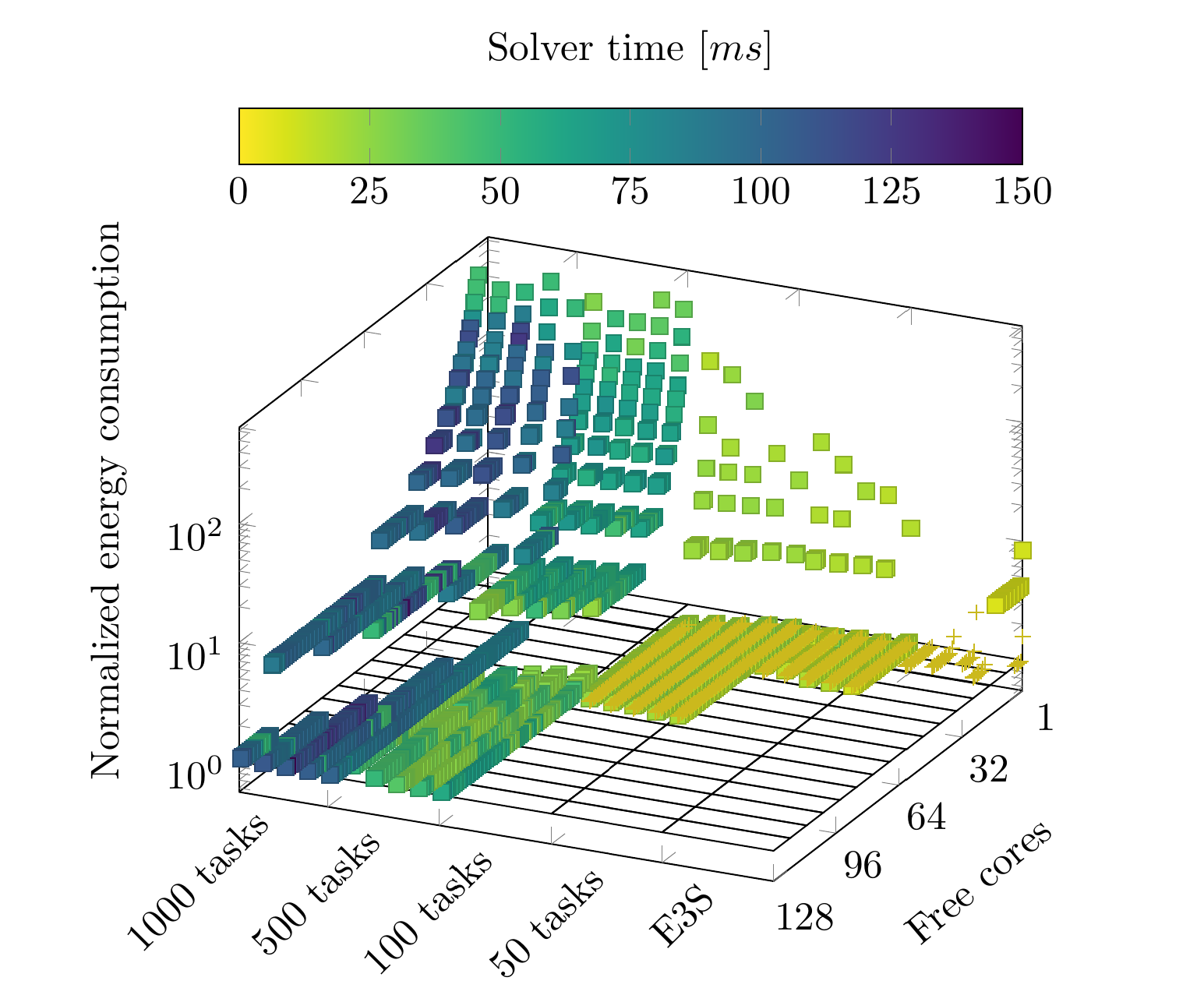

Static (offline) techniques for mapping applications given by task graphs to MPSoC systems often deliver overly pessimistic and thus suboptimal results w.r.t. exploiting time slack in order to minimize the energy consumption. This holds true in particular in case computation times of tasks may be workload-dependent and becoming known only at runtime or in case of conditionally executed tasks or scenarios. We present and analyze algorithms for scheduling such periodic applications and provide provably optimal results as well as approximation algorithms with proven guarantees on the achieved energy savings.

Consumed energy of our approach in empirical experiments.

Designing Algorithms using Untrusted Predictions

A large disadvantage of machine-learned predictors is that we cannot expect correct predictions in all situations. Still, decision-making systems that are based on such predictors need not only to benefit from good predictions but also to achieve a decent performance when the predictions are inadequate. We propose a robust prediction setup for arbitrary metrical task systems (MTS) (e.g., caching, k-server and convex body chasing) and online matching on the line. Specifically for caching, we present an algorithm whose performance, as a function of the prediction's quality, is exponentially better than what is achievable for general MTS. Finally, we present an empirical evaluation of our methods on real world datasets, which suggests practicality.

Conditional DAG Scheduling

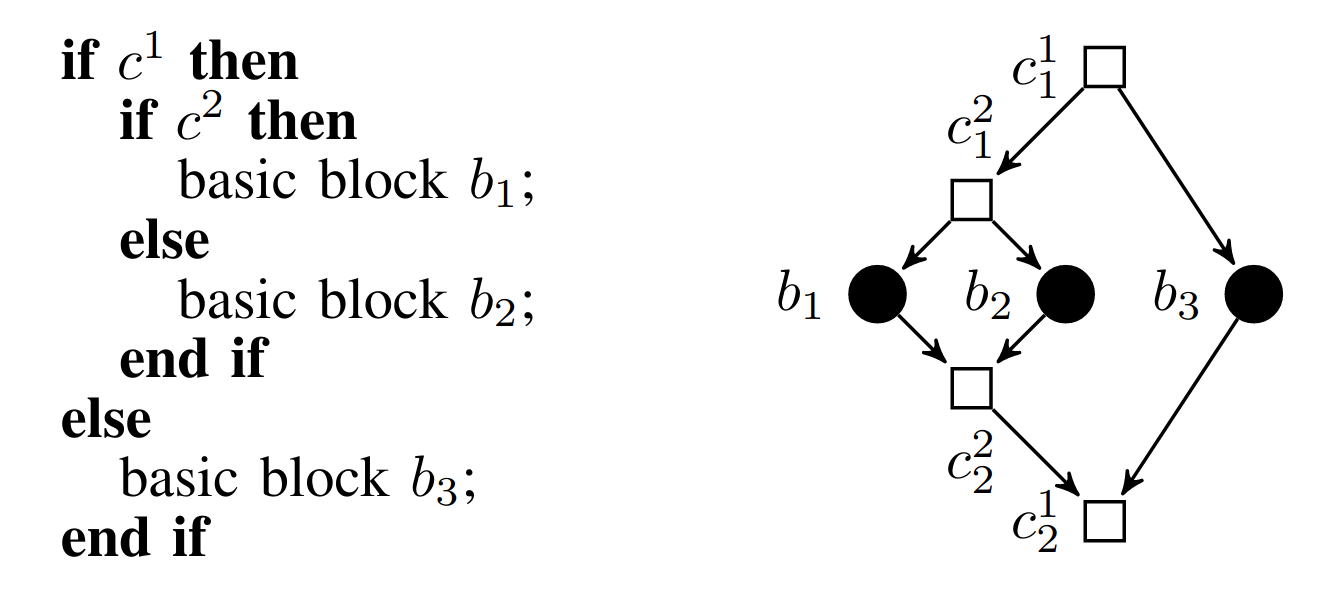

One of the main models for parallel applications is a DAG of tasks, where edges represent precedence constraints. The conditional DAG model allows the presence of control structures such as conditional (if-then-else) constructs. We perform a thorough analysis on the worst-case makespan (latest completion time) of a conditional DAG task under list scheduling (a.k.a. fixed-priority scheduling). We show several hardness results concerning the complexity of the optimization problem on multiple processors. Complementing these negative results, we show that certain practice-relevant DAG structures are very well tractable.

Example source code and its representation as a conditional DAG.

Scheduling Self-Suspending Tasks

In computing systems, a job may suspend itself (before it finishes its execution) when it has to wait for certain results from other (usually external) activities. Such self-suspension behavior has been shown to induce performance degradation in real-time systems. For frame-based periodic real-time tasks, in which the periods of all tasks are identical and all jobs related to one frame are released synchronously, we explore different approximation metrics with respect to resource augmentation factors under different scenarios for both uniprocessor and multiprocessor systems. Our experimental results show that such more carefully designed schedules can significantly outperform the state-of-the-art.

Scheduling on Heteregoneous Processors

Modern platforms are using accelerators in conjunction with standard processing units in order to reduce the running time of specific operations, such as matrix operations. We consider the problem of scheduling a set of tasks subject to precedence constraints on hybrid platforms, composed of two types of processing units. We improve the best known approximation algorithm and propose the first conditional lower bounds specific to this setting. In a separate work, we have conducted a survey classifying the main algorithms existing to handle different settings of hybrid platform scheduling and propose unified implementations to evaluate them on a large set of benchmarks.

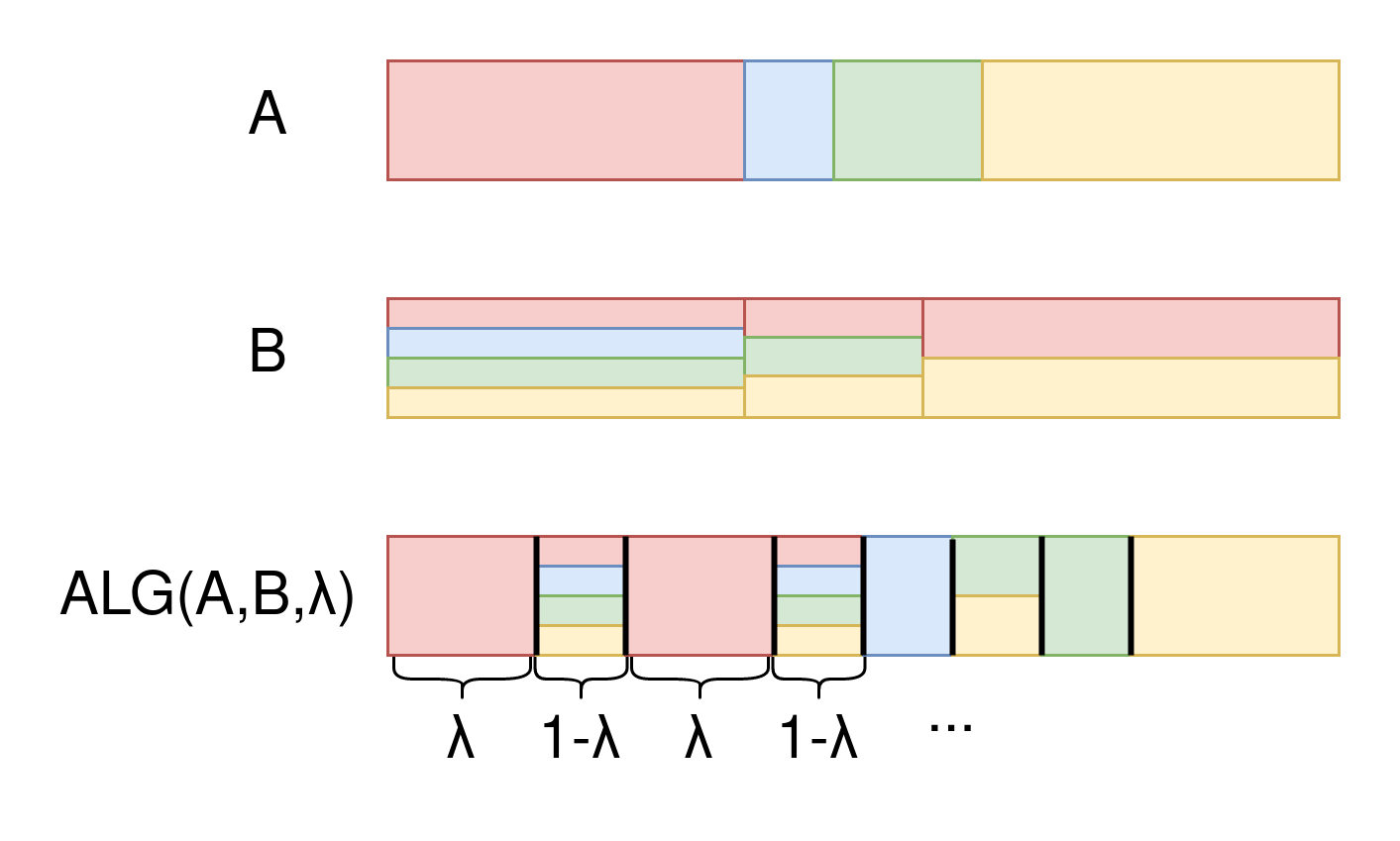

Prediction-clairvoyant scheduling

In many realistic scheduling scenarios, the precise processing times of the jobs are uncertain. Hence, it is often assumed that they are completely unknown. The classic preemptive approach for this problem is the famous Round-Robin algorithm, which distributes the resources fairly among all unfinished jobs. However, this setting often is overly pessimistic, as in many applications we have a rough estimate of a job's length, e.g. using simple statistics or machine learning. We consider using such untrusted predictions to design algorithms that improve over long-standing lower bounds given sufficiently good predictions, and also demonstrate their applicability and improvements on real world data in empirical experiments.

Preemptive robustification of following a predicted schedule (A) by using Round-Robin (B).

News

- May 20, 2021: Alexander Lindermayr's Master's Thesis "Learning-Augmented Online Algorithms for the 2-Server Problem on the Line and Generalizations" was nominated for the Bremer Studienpreis award by the Faculty of Mathematics and Computer Science of the University of Bremen.

- Sept 30, 2020: Bertrand Simon joins as a CNRS researcher the IN2P3 Computing Center in Villeurbanne/Lyon.

| [1] | Nidhi Anantharajaiah, Tamim Asfour, Michael Bader, Lars Bauer, Jürgen Becker, Simon Bischof, Marcel Brand, Hans-Joachim Bungartz, Christian Eichler, Khalil Esper, Joachim Falk, Nael Fasfous, Felix Freiling, Andreas Fried, Michael Gerndt, Michael Glaß, Jeferson Gonzalez, Frank Hannig, Christian Heidorn, Jörg Henkel, Andreas Herkersdorf, Benedict Herzog, Jophin John, Timo Hönig, Felix Hundhausen, Heba Khdr, Tobias Langer, Oliver Lenke, Fabian Lesniak, Alexander Lindermayr, Alexandra Listl, Sebastian Maier, Nicole Megow, Marcel Mettler, Daniel Müller-Gritschneder, Hassan Nassar, Fabian Paus, Alexander Pöppl, Behnaz Pourmohseni, Jonas Rabenstein, Phillip Raffeck, Martin Rapp, Santiago Narváez Rivas, Mark Sagi, Franziska Schirrmacher, Ulf Schlichtmann, Florian Schmaus, Wolfgang Schröder-Preikschat, Tobias Schwarzer, Mohammed Bakr Sikal, Bertrand Simon, Gregor Snelting, Jan Spieck, Akshay Srivatsa, Walter Stechele, Jürgen Teich, Furkan Turan, Isaías A. Comprés Ureña, Ingrid Verbauwhede, Dominik Walter, Thomas Wild, Stefan Wildermann, Mario Wille, Michael Witterauf, and Li Zhang. Invasive Computing. FAU University Press, August 16, 2022. [ DOI ] |

| [2] | Alexander Lindermayr and Nicole Megow. Non-clairvoyant scheduling with predictions revisited. CoRR, abs/2202.10199, 2022. |

| [3] | Alexander Lindermayr, Nicole Megow, and Bertrand Simon. Double coverage with machine-learned advice. In ITCS, volume 215 of LIPIcs, pages 99:1–99:18. Schloss Dagstuhl - Leibniz-Zentrum für Informatik, 2022. |

| [4] | Lin Chen, Nicole Megow, Roman Rischke, Leen Stougie, and José Verschae. Optimal algorithms for scheduling under time-of-use tariffs. Ann. Oper. Res., 304(1):85–107, 2021. |

| [5] | Franziska Eberle, Ruben Hoeksma, Nicole Megow, Lukas Nölke, Kevin Schewior, and Bertrand Simon. Speed-robust scheduling - sand, bricks, and rocks. In IPCO, volume 12707 of Lecture Notes in Computer Science, pages 283–296. Springer, 2021. |

| [6] | Martin Böhm, Nicole Megow, and Jens Schlöter. Throughput scheduling with equal additive laxity. In CIAC, volume 12701 of Lecture Notes in Computer Science, pages 130–143. Springer, 2021. |

| [7] | Vincent Fagnon, Imed Kacem, Giorgio Lucarelli, and Bertrand Simon. Scheduling on hybrid platforms: Improved approximability window. In Proceedings of the Latin American Theoretical Informatics Symposium, 2020. |

| [8] | Olivier Beaumont, Louis-Claude Canon, Lionel Eyraud-Dubois, Giorgio Lucarelli, Loris Marchal, Clément Mommessin, Bertrand Simon, and Denis Trystram. Scheduling on two types of resources: A survey. ACM Computing Surveys, 2020. |

| [9] | Antonios Antoniadis, Christian Coester, Marek Elias, Adam Polak, and Bertrand Simon. Online metric algorithms with untrusted predictions. In Proceedings of the Thirty-Seventh International Conference on Machine Learning (ICML), 2020. |

| [10] | Bertrand Simon, Joachim Falk, Nicole Megow, and Jürgen Teich. Energy minimization in DAG scheduling on MPSoCs at run-time: Theory and practice. In Proceedings of the Workshop on Next Generation Real-Time Embedded Systems (NG-RES), pages 2:1–2:13, 2020. [ DOI ] |

| [11] | Alberto Marchetti-Spaccamela, Nicole Megow, Jens Schlöter, Martin Skutella, and Leen Stougie. On the complexity of conditional dag scheduling in multiprocessor systems. In Proceedings of the IEEE International Parallel and Distributed Processing Symposium (IPDPS), 2020. |

| [12] | Franziska Eberle, Nicole Megow, and Kevin Schewior. Optimally handling commitment issues in online throughput maximization. In 28th Annual European Symposium on Algorithms, ESA 2020, September 7-9, 2020, Pisa, Italy (Virtual Conference), volume 173 of LIPIcs, pages 41:1–41:15. Schloss Dagstuhl - Leibniz-Zentrum für Informatik, 2020. [ DOI ] |

| [13] | Lin Chen, Franziska Eberle, Nicole Megow, Kevin Schewior, and Clifford Stein. A general framework for handling commitment in online throughput maximization. Math. Program., 183(1):215–247, 2020. [ DOI ] |

| [14] | Franziska Eberle, Felix Fischer, Jannik Matuschke, and Nicole Megow. On index policies for stochastic minsum scheduling. Operations Research Letters, 47(3):213–218, 2019. |

| [15] | Jian-Jia Chen, Tobias Hahn, Ruben Hoeksma, Nicole Megow, and Georg von der Brüggen. Scheduling self-suspending tasks: New and old results. In Proceedings of the 31st Euromicro Conference on Real-Time Systems (ECRTS 2019), 2019. |